With generative AI now so accessible and easy to use in SEO, it's no wonder it's tempting to just leave it all to LLMs.

But should you?

The short answer is: NO

Generative AI in SEO presents both incredible opportunities and serious risks. I'll be talking about this extensively at the upcoming Women in Tech SEO Fest. For now, here are a few thoughts on the issues at hand and how to scale responsibly.

The hype vs. the reality of generative AI in SEO

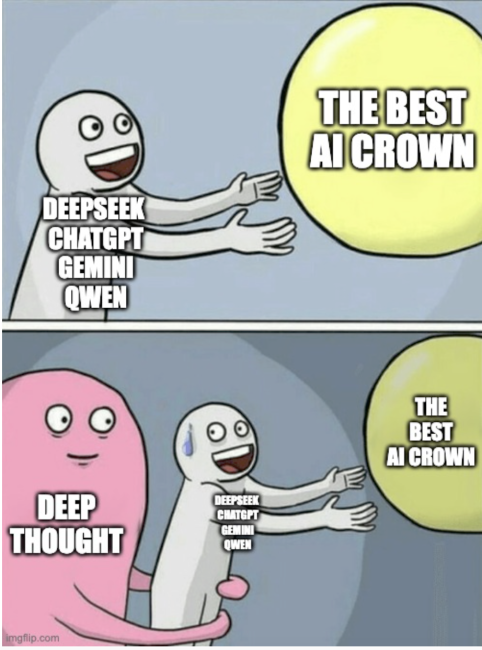

Anyone reading Ed Zitron will know all about the hype surrounding AI and why some of it is, let's face it, hot air. The recent release of DeepSeek's newest model is just another nail in the coffin of that hype.

LLMs won't save the world (if anything, they might make things worse), and AGI is a long, long way away, if it is possible at all. This doesn't mean generative AI is useless.

LLMs like ChatGPT and Gemini are making SEO more efficient than ever. We can now:

- Automate some of our daily SEO processes

- Use AI for keyword clustering and cannibalisation analysis

- Enhance on-page optimisation with AI-powered suggestions

- Scale SEO efforts for brands with limited budgets

But with this rapid AI automation come serious concerns. To name just a few:

Environmental impact

Most AI models consume vast resources, raising concerns about sustainability.

Training a single large AI model can emit as much carbon dioxide as five cars over their lifetimes. LLMs are resource-intensive, requiring massive computing power and data storage. I still worry even when models are reportedly far more efficient, as DeepSeek and Alibaba claim, partly because of the sheer scale at which they seem to be used. DeepSeek has done a stellar job on its PR; I would argue better than any of the US-based companies.

The other day I got chatting with a cab driver. He wanted to know what I thought about AI, as he now has DeepSeek on his phone. He wasn't one of the many engineers driving cabs here (another story), but someone whose job had nothing to do with tech and who had no experience in it. And now he is interested and uses it. He hadn't heard of ChatGPT, let alone any of the others. What is the impact of this mass use on the environment?

Ethical concerns

LLMs are known to hallucinate and harbour biases. This is not such a huge issue if you use them to write content, but imagine, let's say, the NHS using them in its decision-making. You won't need to go far to find examples where generative AI mistakes led to serious harm. A couple of recent examples include the Microsoft-powered chatbot that advised bosses to take workers' tips and landlords to discriminate based on source of income, and iTutorGroup's recruiting software, which turned out to be ageist.

Trust and privacy issues

The newest DeepSeek V3 model and the recently launched Alibaba Qwen 2.5 offer at least some opportunity to address the environmental and sustainability issues. Ben Thompson has written a great article explaining the ins and outs of the V3 model.

The challenge here is privacy. DeepSeek's newest model has already been hacked, and only days after its release, Qwen is facing security questions as well. Not that I'm saying the US models are bulletproof, either!

For anyone interested in the security challenges around Chinese LLMs, have a read of the excellent analysis by Ellie Berreby.

Presumably, running these models in your own local environment, or ideally in a Docker container, would solve some of these issues, but it is much more technical and not accessible to everyone. It's on my list to try!

Polluting the digital ecosystem

AI-generated content pollutes the digital ecosystem with low-quality dross, forcing Google and other search engines into a constant game of whack-a-mole. Personally, I don't want to be part of an ecosystem based on churning out the same information everyone else is serving. If the human race and knowledge had developed in the same way, we would still be living in caves!

So, where do we draw the line between using AI to scale SEO and the responsibility we have as users of LLMs and creators of content?

For many brands, the cost is limited to the impact on the company, i.e. don't churn out AI content because you will get spanked by Google. Even for those who try, Google sometimes takes a while to catch up, but it does eventually.

We've seen this with the rollout of the Helpful Content Update (HCU), where some websites were unintended victims, but a lot of them were on the chopping block for all the right reasons.

As LLM content becomes even more widespread, we can expect more updates, more frequently, and with many more websites on the chopping block.

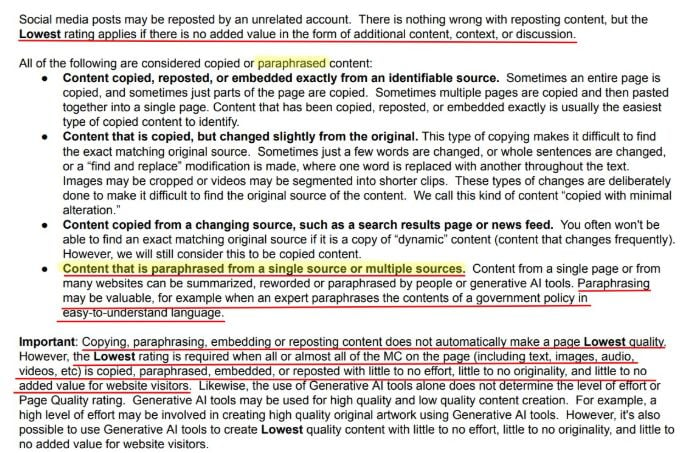

There is no doubt that Google is rightfully cracking down on AI-generated spam. In 2024, it updated its spam policies, and this year we've already had updates to the Search Quality Rater Guidelines.

It is clear that the problem isn’t using LLMs. It’s using them badly!

We've seen this scenario with some of the clients who came to us hoping we could diagnose why their results dropped following the recent updates. The impact on their business was huge!

But what concerns me, probably even more, is the impact that these practices have on the world we live in.

Pumping out AI-generated dross is not only bad for the company, but it’s bad for us, as a planet, and as users of the internet!

This is why scaling SEO with AI requires a responsible framework: one that balances automation, human oversight, and the responsibility we all share for the world we live in.

The framework for responsible generative AI in SEO

At WTS London, I’ll be sharing more details about our journey towards a more responsible framework for AI implementation in SEO.

For now, here are five considerations to get you started:

1. Understand AI’s role in SEO

AI should assist human expertise, not replace it. SEO success isn't about automating everything; it's about enhancing workflows while maintaining control and clear QA processes.

2. Set responsible safeguards

Brands need clear AI policies that include:

- AI disclosure in content strategies

- Transparent AI-generated vs. human-written content guidelines

- Consideration of AI’s carbon footprint and sustainability efforts

3. Experiment, but responsibly

Think about your actions when experimenting. Ask yourself questions such as:

- Do I really need to run all the content through the LLM when doing cannibalisation analysis at scale?

- Should I batch-feed the model and QA as I go, instead of running everything at once? Spoiler: the answer is always a big fat yes! You should do it to ensure better data accuracy and to make the experiment more responsible.

- Which model is the best one for my use case, and how does it perform against known pitfalls such as bias, environmental impact, and privacy?

- Can I run it differently and, as a result, more responsibly? For example, by running LLMs locally.
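To make the first two questions more concrete, here is a minimal Python sketch, assuming you have already mapped each page to its target keywords. Rather than sending every page to the model, it uses a cheap keyword-overlap score (Jaccard similarity) to shortlist likely cannibalisation pairs, then feeds only those pairs to the model in small batches, stopping as soon as a QA check fails. The `ask_llm` function, the stop-word list, the 0.5 threshold and the batch size are all hypothetical placeholders, not part of any specific tool.

```python
from itertools import combinations

# Hypothetical stop-word list; a crude stand-in for real keyword extraction.
STOP_WORDS = {"the", "a", "an", "for", "to", "of", "and", "in", "how", "best"}


def keyword_set(text):
    """Lowercase the target keywords and drop stop words."""
    return {w for w in text.lower().split() if w not in STOP_WORDS}


def jaccard(a, b):
    """Share of keywords two pages have in common (0 = none, 1 = identical)."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def candidate_pairs(pages, threshold=0.5):
    """Keep only page pairs whose keywords overlap enough to justify an LLM check."""
    sets = {url: keyword_set(kw) for url, kw in pages.items()}
    return [
        (u1, u2)
        for u1, u2 in combinations(sorted(sets), 2)
        if jaccard(sets[u1], sets[u2]) >= threshold
    ]


def batched(items, size):
    """Yield successive batches of at most `size` items."""
    for i in range(0, len(items), size):
        yield items[i:i + size]


def ask_llm(pair):
    """Hypothetical placeholder: replace with a call to whichever model you choose."""
    return {"pair": pair, "cannibalising": None}


def looks_valid(results):
    """Minimal QA check: every result has the fields we expect."""
    return all("pair" in r and "cannibalising" in r for r in results)


def run_in_batches(pairs, batch_size=2, qa=looks_valid):
    """Send pairs to the model batch by batch; stop early if QA fails."""
    reviewed = []
    for batch in batched(pairs, batch_size):
        results = [ask_llm(p) for p in batch]
        if not qa(results):
            break  # fix the prompt or data before spending more compute
        reviewed.extend(results)
    return reviewed


# Example usage with made-up pages.
pages = {
    "/blog/seo-audit-checklist": "seo audit checklist",
    "/guides/seo-audit": "seo audit checklist guide",
    "/blog/link-building": "link building tips",
}
pairs = candidate_pairs(pages)
print(pairs)  # [('/blog/seo-audit-checklist', '/guides/seo-audit')]
reviewed = run_in_batches(pairs)
```

Only one of the three possible pairs reaches the model here. Keyword overlap is deliberately crude; the point is that a cheap filter can cut down how much content you push through an LLM before any human QA happens.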

4. Avoid AI pitfalls

- Do not blindly trust AI-generated SEO recommendations; always validate them

- Do not mass-produce AI-written content without proper human oversight or without adding value. Instead, focus on creating authentic SEO content that brings value!

- Do not ignore Google’s guidelines! It will bite you in the bum eventually.

5. Ideally, get accredited!

Vixen Digital is now pursuing two accreditations – and I suggest you do the same!

- ISO/IEC 27001 accreditation – an international standard for information security that helps organisations comply with privacy laws and establish best practices for data privacy.

- ISO/IEC 42001 accreditation – the world's first certifiable standard for responsible AI management, which will solidify Vixen Digital's position as a leader in mindful AI adoption in marketing.

Join me at the Women in Tech SEO Fest London to hear more about responsible LLM use!

- Where: WTS London

- Details: Women in Tech SEO Festival

- Topic: Scaling Smart: Responsible AI Strategies for SEO on a Tight Budget